I am interested in the relationship between #NoEstimates, heuristics and the Kelly criterion.

I first heard of this criterion in a tweet from @duarte_vasco.

It was written in response to another from @galleman.

I’ve found proponents of #NoEstimates to be quiet on what they want to achieve.

This is the first concrete discussion I’ve run across that provides insight into the thinking of #NoEstimates advocates.

In discussing #NoEstimates, I think it important to keep survivorship bias top of mind.

There is evidence that software estimates don’t work well.

There is less evidence that #NoEstimates is the solution.

Is #NoEstimates the solution?

Maybe, maybe not.

It may provide insight on new ways of thinking about software delivery.

The pertinent parts of @galleman’s tweet:

The continued conjecture that decisions can be made in the presence of uncertainty without estimates

…

is simply a fallacy, due to lack of understanding of the principles or willfully intent to deceive (sic)

and @duarte_vasco’s response:

Uncertainty can never be managed by estimates.

Only through survival heuristics like the Kelly criterion.

..

You survive uncertainty, you don’t remove it.

Basic understanding of complex systems.

I love the contradiction:

- decisions can’t be made in the presence of uncertainty without estimates.

- estimates are not a tool for managing uncertainty.

A close look at these in opposition might form the basis of a useful education on #NoEstimates.

Of course, decisions are made in the presence of uncertainty without estimates.

Estimates are a tool to approximate the true value of something.

Their usefulness lies in how closely they approximate that value.

Creating useful estimates is a resource allocation problem: invest or don’t.

If you choose to invest, then the question becomes how much.

The purpose of #NoEstimates is to explore decision making without estimates.

This implies low (zero?) investment in estimating.

Exploration isn’t fallacy.

It’s an attempt to find new and better ways of delivering software.

To accept #NoEstimates is to agree to explore our ability to make decisions without estimates.

What about uncertainty?

The lack of certainty, a state of limited knowledge where it is impossible to exactly describe

the existing state, a future outcome, or more than one possible outcome.

Uncertainty is part of the motivation for #NoEstimates.

If it were not, we’d have true values equaling estimates and the debate would be over.

Uncertainty is why risk management is a project management best practice.

There is no argument that well-defined principles are in place for both risk and project management.

The response says to use survival heuristics, like the Kelly criterion.

What is a survival heuristic?

I couldn’t find a definition, so I went with adaptive heuristics and decision making.

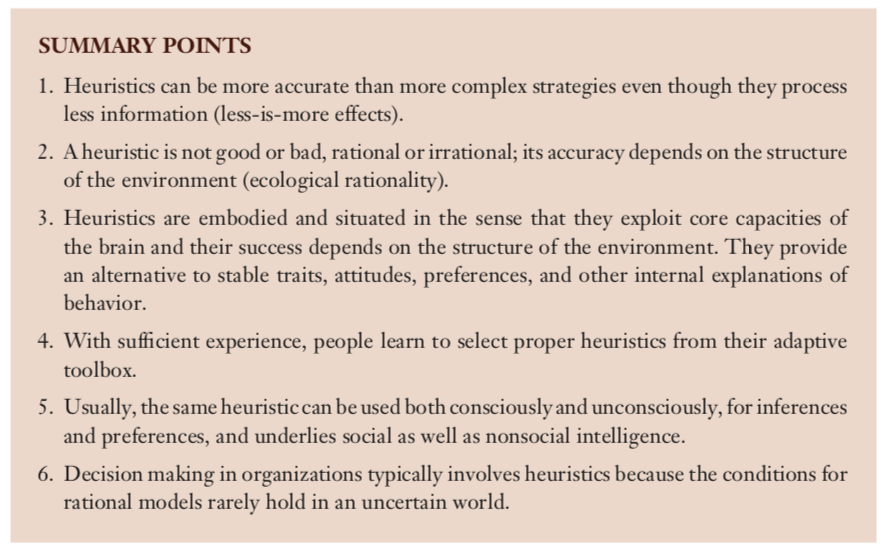

A paper, Heuristic Decision Making, says this:

This research indicates that (a) individuals and organizations often rely on simple heuristics in

an adaptive way, and (b) ignoring part of the information can lead to more accurate judgments than

weighting and adding all information, for instance for low predictability and small samples.

A heuristic:

A strategy that ignores information to make decisions faster, more frugally, and/or more accurately than more complex methods.

Mapping Heuristic Decision Making onto #NoEstimates:

- I liken traditional project management to the description of rational reasoning.

- I liken #NoEstimates to a heuristic and the description of irrational reasoning.

Typical thinking says that people often rely upon heuristics but would be better off in terms of accuracy if they did not.

In my view, @galleman rejects the notion of #NoEstimates (heuristics), preferring accuracy (estimates).

This model of rational reasoning requires knowledge of all relevant alternatives, their consequences and probabilities, and a predictable world without surprises.

Bayesian decision theory calls these situations small worlds.

In a large world, relevant knowledge is missing or has to be estimated from small samples.

This means the conditions of rational decision theory are not met.

In large worlds, one cannot assume that rational models automatically provide the correct answer.

They may provide incorrect answers.

This situation leads to less-is-more effects:

when less information leads to better results than more information.

Part of the debate between traditional project management and #NoEstimates lies in the notion that heuristics can outperform sophisticated models.

It is incorrect to assume that project management, as viewed through the rational reasoning model, is ill-equipped to deal with large world problems.

That’s why it exists: to deal with large world problems.

The interesting question is whether #NoEstimates can develop heuristics to achieve the less-is-more effect.

The paper describes how, when heuristics are formalized, certain large worlds lend themselves to simple heuristics that provide better results than standard statistical methods.

(The paper contains examples of heuristics used in large world problems.)

There is a point where more is not better.

In my view, @duarte_vasco views #NoEstimates this way.

The paper pursues two research questions:

- Description: which heuristics do people use in which situations?

- Prescription: when should people rely on a given heuristic rather than a complex strategy to make more accurate judgements?

These questions are aligned with the questions #NoEstimates should answer.

The great thing about this paper is that it goes on to describe a framework that could be applied to #NoEstimates:

- The Adaptive Toolbox:

the cognitive heuristics, their building blocks (e.g., rules for search, stopping, decision), and the core capacities (e.g., recognition memory) they exploit.

- Ecological Rationality:

investigate in which environments a given strategy is better than other strategies (better—not best—because in large worlds the optimal strategy is unknown).

Ecological rationality is a reason why @galleman and @duarte_vasco are in opposition.

I bet the opposition comes from implied differences in environments.

(@galleman’s LinkedIn profile implies a regulated work environment; @duarte_vasco’s less so.)

The paper discusses many different models of heuristics.

A compelling model in relation to my understanding of #NoEstimates is the 1/N Rule:

Another variant of the equal weighting principle is the 1/N rule, which is a simple heuristic for the allocation of resources (time, money) to N alternatives:

- 1/N rule: Allocate resources equally to each of N alternatives.

- This rule is also known as the equality heuristic.
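
The 1/N rule is simple enough to sketch in a few lines of Python.

This is my own illustration, not code from the paper; the function name `equal_allocation` and the example alternatives are hypothetical.

```python
def equal_allocation(total, alternatives):
    """Split `total` equally across the alternatives (the 1/N rule)."""
    if not alternatives:
        raise ValueError("need at least one alternative")
    share = total / len(alternatives)
    # Every alternative gets the same share; nothing about the
    # alternatives themselves is weighed or estimated.
    return {name: share for name in alternatives}


# Hypothetical example: 120 hours spread over three candidate features.
allocation = equal_allocation(120.0, ["feature_a", "feature_b", "feature_c"])
print(allocation)
```

Note that the rule uses only the number of alternatives and ignores every other piece of information about them, which is what makes it a heuristic in the paper's sense.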

It is also applicable to investment, which brings in the Kelly criterion:

a formula used to determine the optimal size of a series of bets in order to maximise the logarithm of wealth.

In most gambling scenarios, and some investing scenarios under some simplifying assumptions, the Kelly strategy will do

better than any essentially different strategy in the long run (that is, over a span of time in which the observed fraction

of bets that are successful equals the probability that any given bet will be successful).
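
For a single bet with two outcomes, the Kelly criterion is commonly written as f = p - (1 - p) / b, where p is the probability of winning and b is the net odds received on a win.

A minimal sketch of that simple case follows, assuming a binary bet that is either won or lost in full; the function name `kelly_fraction` and the example numbers are my own, not from either tweet.

```python
def kelly_fraction(p, b):
    """Fraction of the bankroll to stake on a bet that wins with
    probability p and pays b-to-1 (net odds) on a win."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if b <= 0:
        raise ValueError("b must be positive net odds")
    # f = p - q / b, with q = 1 - p the probability of losing.
    return p - (1.0 - p) / b


# Hypothetical example: a 60% chance of winning an even-money bet.
fraction = kelly_fraction(0.6, 1.0)
print(f"stake {fraction:.0%} of the bankroll")
```

A zero or negative result means the formula says not to bet at all.

The function takes p and b as inputs, so whoever calls it has to supply both values.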

The connection between @duarte_vasco’s tweet and the Kelly criterion is only weakly tied to the 1/N rule.

The connection arises from the similarity in placing bets or investments with the 1/N rule.

I can’t find a connection between the Kelly criterion and project planning.

I looked into whether there was a connection between Markov processes, the Kelly criterion, and project management, and didn’t come up with anything I could put together.

I’m stumped on the connection between the Kelly criterion and #NoEstimates, beyond the fact that it’s a heuristic.

If you’ve made the connection, please let me know!

What about complex systems?

@duarte_vasco’s tweet implies that uncertainty is a property of complex systems and that heuristics are a way of dealing with these systems.

Ok.

A summary of Heuristic Decision Making:

In all, I see a place for traditional project planning and #NoEstimates.

The challenge for #NoEstimates advocates is to educate others on their insights into the heuristics they develop and, importantly, on when to apply them and which domains they are best suited to.

I’ll leave this as a call to action for the #NoEstimates community.

Help:

- identify and characterize the toolkit you are creating.

- identify where (in which domains) this toolkit is most effective.

Thanks to @duarte_vasco and @galleman for sharing.

I learned something from each of you.