In "What is Good Design?", I explore what Christopher Alexander says about good design and what Robert C. Martin says are the traits of bad design. Here I look at what Kent Beck has to say about Responsive Design.

This post includes an attempt to make sense of what Alexander, Brooks, and Beck say about design. It's a bit of a mess, but there are good references.

Kent says that good design permits a steady introduction of new features and is easy to test and to tune for performance. The notions of good and bad design are symmetrical: both reflect an aesthetic sense (you know it when you see it).

Kent has a talk on Responsive Design. The start of this talk goes into the approach he used when writing the book Smalltalk Best Practice Patterns.

He used a stack of index cards to track design decisions, including constraints and decision points, which made a powerful reflective tool. This approach showed that he used only four design strategies.

Kent called this approach Responsive Design. Design gets interesting when you have feedback and must design under constraints. The responsive part is how you handle that feedback: do I stick with my current understanding of the design, or do I respond to the feedback?

The goal of development is to create a steady flow of features. You need to balance design so that it happens neither too early nor too late, paying careful attention to what to design and when. Kent borrows a definition of design from permaculture: beneficially relating elements.

Kent Beck’s values in design:

- simplicity (removing extraneous elements)

- feedback (creating closed loops on the design)

- community (being accessible to the user community)

Patterns are important for both implementation and communication. This means not just design patterns but smaller patterns as well.

Principles help when you encounter a novel situation. The paper on Dynamo, Amazon's key-value store, has a great description of its design principles. Principles rapidly shrink the design space, so look for universal principles. How do you choose these principles?

Kent's design strategies are:

- Manage your leaps: move from the current design to the new design in safe steps, not big ones.

- Manage large leaps in parallel: operating the old and new designs in parallel for a while minimizes the size of a leap. This keeps the system working throughout and lets you see progress.

- Stepping stone: when you can't get where you want to go in one safe step, build something that takes you there through intermediate steps.

- Simplification: what is the least you can do to move forward? Try to eliminate things that aren't germane in the near term, but balance this against the long-term need to change.

- Refactor: refactoring the code is important.

- Succession: there are successive patterns that are important to follow. See Kent Beck's paper "First One, Then Many" (it isn't available online).
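The parallel strategy can be sketched in a few lines of Python. This is a minimal illustration, not Beck's code: the order-total functions `total_old` and `total_new` and the `ParallelDesign` wrapper are hypothetical. The wrapper calls both designs, keeps returning the old (trusted) result, and records any disagreement, so the system keeps working while you gather evidence that the new design is ready to take over.

```python
from typing import Callable, List, Tuple


# Hypothetical example: two designs for computing an order total in cents.
def total_old(prices: List[int]) -> int:
    total = 0
    for price in prices:
        total += price
    return total


def total_new(prices: List[int]) -> int:
    return sum(prices)


class ParallelDesign:
    """Run the old and new designs side by side (hypothetical helper).

    The old design stays authoritative: its result is always returned.
    Disagreements from the new design are recorded for later review.
    """

    def __init__(self, old: Callable, new: Callable) -> None:
        self.old = old
        self.new = new
        self.mismatches: List[Tuple[tuple, object, object]] = []

    def __call__(self, *args):
        expected = self.old(*args)
        try:
            actual = self.new(*args)
        except Exception as exc:  # a crash in the new design must not break callers
            actual = exc
        if actual != expected:
            self.mismatches.append((args, expected, actual))
        return expected


compute_total = ParallelDesign(total_old, total_new)
print(compute_total([199, 250, 1]))  # prints 450
print(compute_total.mismatches)      # prints []
```

Once `mismatches` stays empty under real traffic, the old design can be removed and the wrapper with it, which completes the leap.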

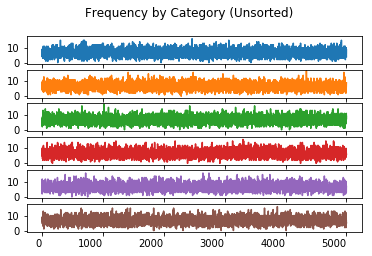

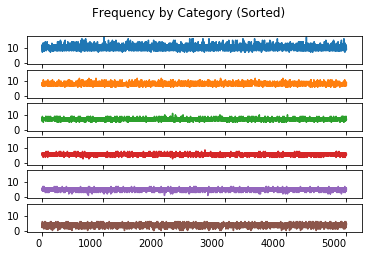

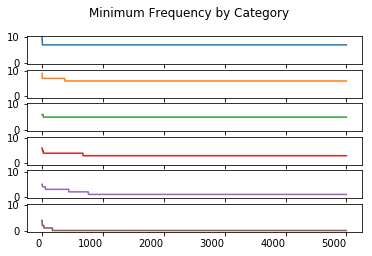

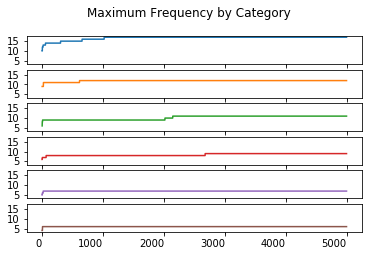

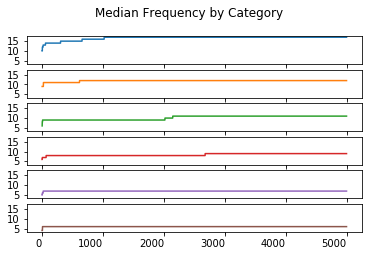

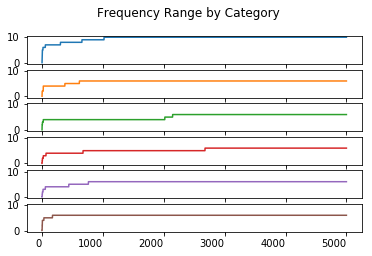

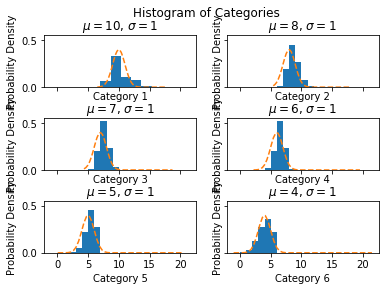

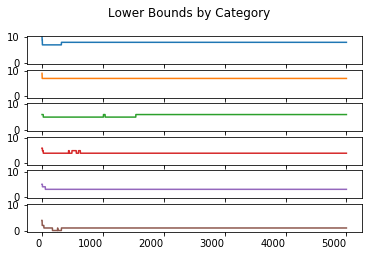

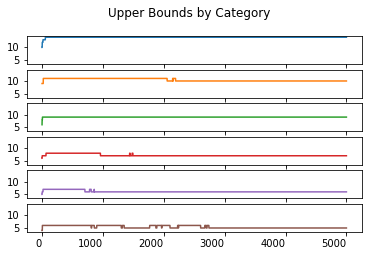

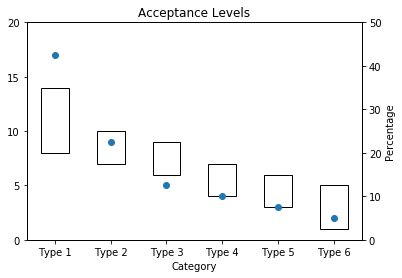

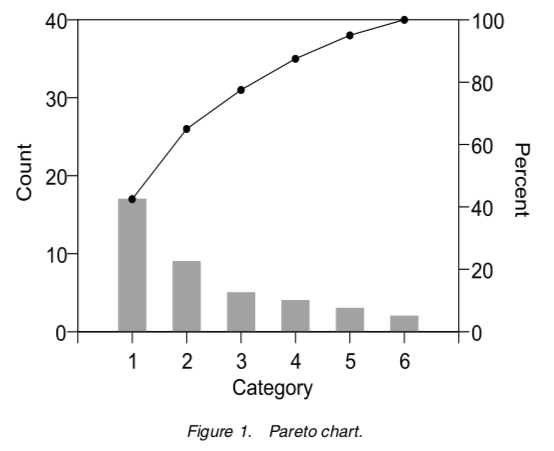

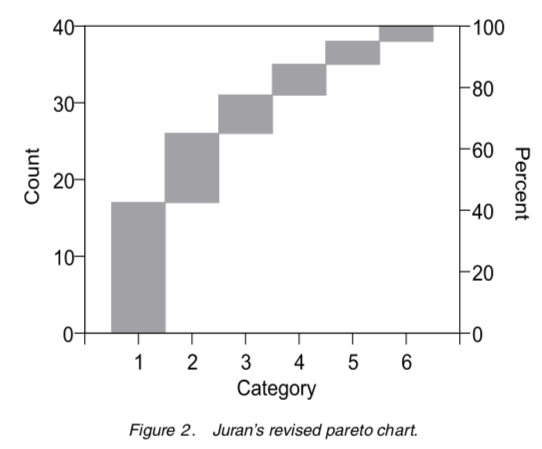

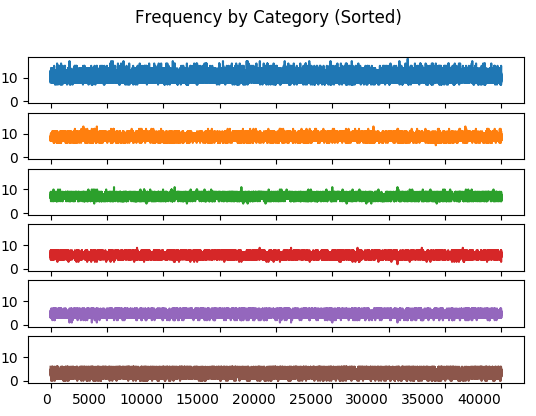

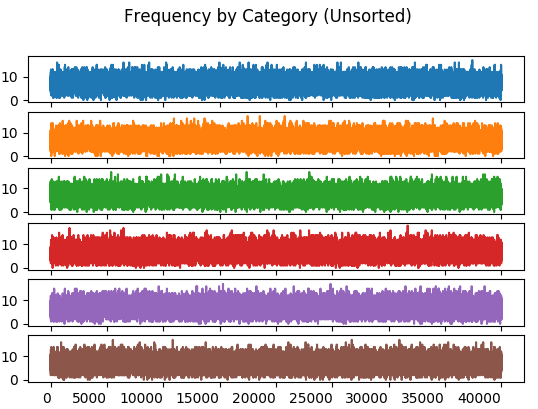

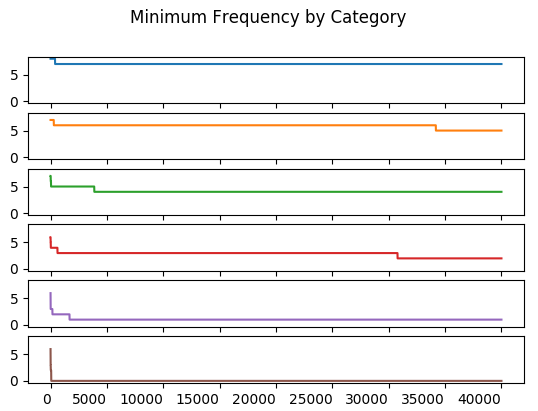

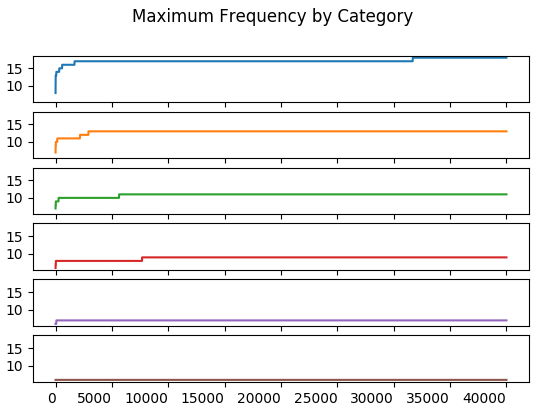

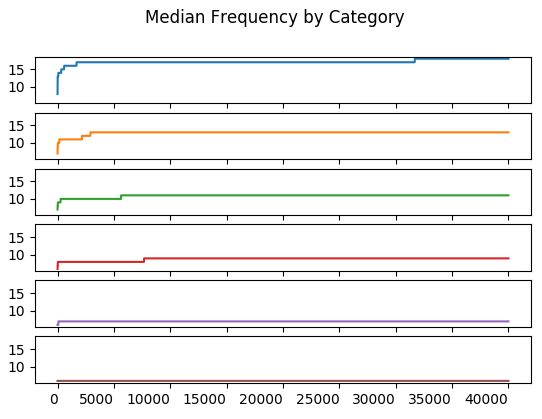

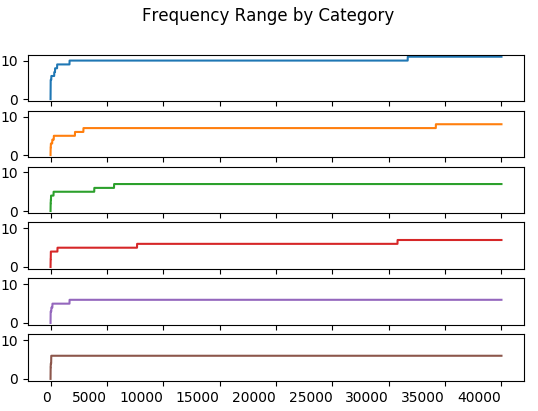

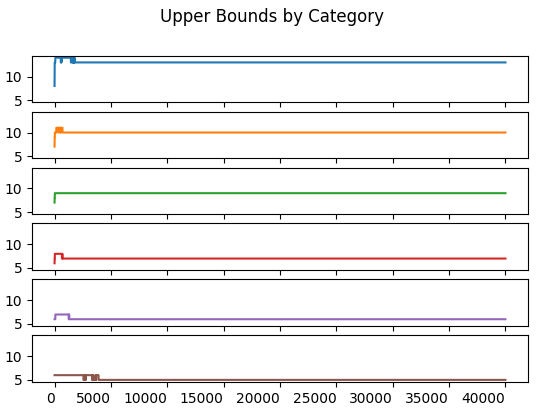

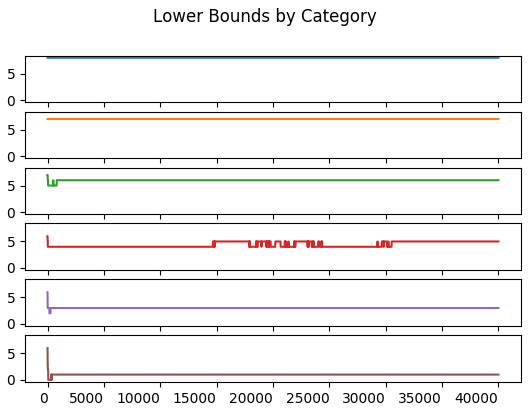

- Data: get data on your system and try to understand what it means.

Rational design process:

Our illusion of control over software design is a dangerous conceit best abandoned.

Brooks, in The Design of Design, and Alexander both walk through the failure of the rational design process. Brooks focuses on the need for iteration and feedback; Alexander focuses on the combinatorial explosion of options that arises from even the simplest design tasks.

See Martin Fowler’s book on refactoring:

- Refactoring in the small is easy.

- Refactoring in the large tends to be harder.

- Most of the hard problems in programming turn out to be design problems.
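To make "refactoring in the small" concrete, here is a minimal Python sketch of one small refactoring from Fowler's catalog, Extract Function (named Extract Method in the first edition). The rental statement and the rate of 3 per day are hypothetical. The pricing rule moves into its own named function while the observable behavior stays the same, which the closing assertion checks.

```python
from typing import List, Tuple


# Before: the pricing rule is buried inside the statement loop.
def statement_before(customer: str, rentals: List[Tuple[str, int]]) -> str:
    total = 0
    for _title, days in rentals:
        total += days * 3
    return f"{customer} owes {total}"


# After Extract Function: the pricing rule has a name and a single home.
def rental_charge(days: int) -> int:
    # Hypothetical rule: a flat charge of 3 per rental day.
    return days * 3


def statement_after(customer: str, rentals: List[Tuple[str, int]]) -> str:
    total = sum(rental_charge(days) for _title, days in rentals)
    return f"{customer} owes {total}"


# A small refactoring must not change behavior.
sample = [("Jaws", 2), ("Up", 1)]
assert statement_before("Ann", sample) == statement_after("Ann", sample)
```

Each step like this is small enough to verify immediately; the large refactorings are hard precisely because they cannot be checked this cheaply.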

See Alexander:

- Hidden structure: how to identify and articulate the deeper, hidden structure.

- Responsive Design Project, started in 2005.

No single best design:

But design has a dark side. While there isn’t a single best design for any system, there are many poor designs for that same system.

Design for feedback:

Over-designing early delays feedback from real use of the system, makes adding features more complicated, and makes adapting the design more difficult. By the same token, under-designing also makes adding features more complicated, increases defects, and makes adapting the design more difficult.

I read somewhere that Alexander's notion of "fit" in design doesn't work for software. I think that's the wrong way to look at it. Fit is about the appropriateness of a form to its context, and software has both form and context.

Consider the Singleton pattern. There are good examples of when to employ this pattern and when not to. If you employ the pattern and the singleton is used by multiple threads, you had better be aware of the double-checked locking pattern. If you employ the pattern in a single-threaded environment, you don't need the lock.
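A minimal Python sketch of a lazily created singleton with double-checked locking, assuming a hypothetical `Config` class. The first check skips the lock once the instance exists; the second check, taken under the lock, stops two threads that both passed the first check from each creating an instance. The sketch relies on CPython publishing the fully constructed object when `_instance` is assigned; in Java the same idiom is only correct if the field is declared `volatile`. In a single-threaded program you can drop the lock, and the two checks collapse into one.

```python
import threading
from typing import Optional


class Config:
    """Hypothetical application-wide settings object, created lazily."""

    _instance: Optional["Config"] = None
    _lock = threading.Lock()

    def __init__(self) -> None:
        self.settings: dict = {}

    @classmethod
    def instance(cls) -> "Config":
        # First check, without the lock: cheap once the instance exists.
        if cls._instance is None:
            with cls._lock:
                # Second check, under the lock: another thread may have
                # created the instance while we were waiting for the lock.
                if cls._instance is None:
                    cls._instance = cls()
        return cls._instance


# Usage: many threads asking at once still share a single instance.
seen = []
workers = [threading.Thread(target=lambda: seen.append(Config.instance())) for _ in range(8)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(all(c is seen[0] for c in seen))  # prints True
```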